Understanding Google My Business & Local Search

Spam In, Garbage Out – Why Google’s Recent Paper on Map Spam is Flawed

The claim made by Google that “…fewer than 0.5% of local searches lead to fake listings” in Google search is NOT a conclusion that can be drawn from Google’s recently published paper. Because of the paper’s assumptions and flawed methodology, this number understates how many local searches surface fake listings. And it may do so by a large margin.

The paper, Pinning Down Abuse on Google Maps, while providing interesting insights into Map spam from 2014 and 2015, is fundamentally flawed in its approach to the question of fake listings in Google Local and in no way warrants the optimistic conclusion that Google noted[1] on their blog.

Definitions

First you need to understand what Google defines as a fake listing for the purposes of this study. It only includes listings that were in gross violation of the guidelines, were caught by their algo or human curation, and were suspended. This excludes any listing that manipulated its name or its reviews[2]. And more importantly it excludes fake listings that their algo didn’t catch.

Then you also need to understand what Google means when they say that “0.5% of local searches lead to fake listings”. They are not saying, as I erroneously thought, that only 0.5% of the listings are fake. They are saying that the listings that were fake and suspended were up for an average of X days and were only seen Y times during that time. I.e., compared to total searches, fake listings constituted 0.5% of the user’s impressions in search and Maps. But as I will detail, even the visibility assumption is flawed.

The Good, The Bad & The (very) Ugly of the Google Research & Blog:

The Good

I think that it serves the industry and the public[3] to know more about Map spam and to understand which sectors are likely to experience it. I laud the transparency, as limited as it has been.

We can also presume, given the noted drop in fake listing creation, that Google has closed many of the loopholes that were leading to spam at such large scale.

The paper also makes the point that it isn’t just the existence of fake listings that matters. What matters as much or perhaps more is whether they are seen by searchers. And many fake listings that we as professionals might uncover might never be seen at all because they are buried too deeply in the ranking hierarchy.

That is a critical point in analyzing Map spam that is all too often forgotten.

The Bad

Factual errors? One hopes that a paper of this caliber would hew to academic standards and document facts and do a thorough literature review.

This paper did neither. The literature review was very thin, and statements to the effect that Map spam is new and that early manifestations occurred in 2015 are untrue on their face[4]. We saw high volume “on-premise” affiliate Maps spam in the florist industry in September of 2008 and massive “on-call” locksmith spam soon after.

“In this paper, we investigate a new form of blackhat search engine optimization that targets local listing services like Google Maps. … Early forms of attacks included defacement, such as graffiti posted to Google Maps in Pakistan.”[5]

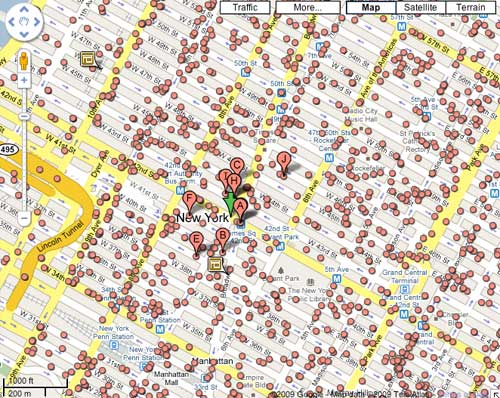

Another problem is the big data, global approach and tone that this paper takes. Local listing spam is a hyper-local phenomenon that impacts high density markets and high value verticals. Lumping views of those listings in with views of rural churches and government offices makes no sense. This sort of “average” conclusion creates an impression that may be statistically true (although it’s not in this case) but that is also very misleading[6].

The paper noted this issue but didn’t deal with it in a systemic way in the publication:

Even so, such impressions can vary across geographic locations. In particular, users in West Harrison, NY were the most affected—where 83.3% of the search results for locksmiths were abusive. In contrast, 15.6% of search results for locksmiths in New York City were abusive.
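To see how a pooled, global average can bury a local problem, here is a minimal sketch. The two locksmith abuse rates are the ones in the paper's passage quoted above; every search volume, and the whole "everything else" row, is a made-up number chosen only to illustrate the effect.

```python
# Illustrative only: the two locksmith abuse rates come from the paper's quote.
# All search volumes and the "everything else" row are hypothetical.
markets = [
    # (label, searches, share of results that were abusive)
    ("locksmiths, West Harrison, NY", 1_000, 0.833),
    ("locksmiths, New York City", 50_000, 0.156),
    ("everything else (hypothetical)", 10_000_000, 0.001),
]


def pooled_abuse_rate(rows):
    """Search-weighted average abuse rate across all rows."""
    total_searches = sum(searches for _, searches, _ in rows)
    abusive = sum(searches * rate for _, searches, rate in rows)
    return abusive / total_searches


for label, _, rate in markets:
    print(f"{label}: {rate:.1%}")
print(f"pooled across all rows: {pooled_abuse_rate(markets):.2%}")
```

With these invented volumes the pooled figure lands well under 1%, even though a searcher looking for a locksmith in either market sees abusive results far more often than that.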

A final issue, and maybe this can’t be easily overcome[7], is the assumption that is made in calculating how often fake listings are seen by searchers.

“Assuming a uniform query rate, this average approximates the likelihood a user would encounter an abusive listing if Google Maps selected listings uniformly at random rather than based on search quality. Effectively, this metric discounts the (in)effectiveness of any particular listing’s SEO.”

Translated, that says that for the purposes of the analysis, one listing will be seen as often as the next and that there is no difference due to SEO.

Given Google’s heavy weighting of a business name, any decent fake listing isn’t just going to use a fake address. They will obviously choose a name that ranks well on a high value, high frequency keyword search. And as we all know, with that keyword laden name, it will be much more visible than the average listing.
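A rough sketch of why the uniform assumption matters. Nothing here is Google data: the 100 listings, the five fake listings sitting in the top five positions, and the 1/rank impression weighting are all hypothetical choices made for illustration.

```python
def fake_impression_share(fake_ranks, n_listings, weight):
    """Share of impressions going to fake listings, given a per-rank weight."""
    total = sum(weight(rank) for rank in range(1, n_listings + 1))
    fake = sum(weight(rank) for rank in fake_ranks)
    return fake / total


n = 100                        # hypothetical number of listings in a market
fake_ranks = [1, 2, 3, 4, 5]   # hypothetical: keyword-stuffed names rank at the top

# The paper's simplification: every listing is equally likely to be seen.
uniform = fake_impression_share(fake_ranks, n, lambda rank: 1.0)

# An assumed alternative: impressions fall off as 1/rank.
by_rank = fake_impression_share(fake_ranks, n, lambda rank: 1.0 / rank)

print(f"uniform assumption: {uniform:.1%}")
print(f"rank-weighted:      {by_rank:.1%}")
```

Under the uniform assumption the five fake listings get exactly their share of the listing count. Once visibility depends on rank, the same five listings take a share of impressions several times larger.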

The Ugly: Problems with methodology

Small factual errors, disappointing definitions and doubtful assumptions are troublesome but do not, in and of themselves, call into question the conclusion. Flawed study design does.

One might forgive some of these sins if the overarching methodology were sound. It isn’t.

Google provided the researchers[8] with listings that were suspended during the research period. The study ONLY included fake listings that had been removed from the index. It makes NO effort to estimate the fake listings that remained in the index undetected during the study period.

“Limitations to our approach: Our study is biased towards abuse caught by the suspension algorithms employed by Google Maps.”

“The main limitation with this approach is that we cannot estimate the number of false negatives, i.e., abusive listings overlooked by Google Maps.”

Thus Google’s blog post conclusion that fewer than 0.5% of local searches lead to fake listings is like a policeman saying “I spent an hour at 5 am looking for speeders. I saw ten but charged five. I saw 100 cars go by, therefore 5% of all cars speed. But I was talking to my wife for some of that time so I may have missed a few.”

A simple thought experiment, based on recent findings, can show the rough impact of this study design decision. In November 2016, Google implemented advanced verification for plumbers in San Diego. 89% of all listings were dropped from the visible index and prevented from showing. One assumes that most of them were fake by the standards of the guidelines.

According to this paper, Google tightened up verification in July 2015. And yet the even tighter advanced verification uncovered 900% more fake listings than the algo suspended. In other private research we saw similar results in the locksmith arena. It bears noting that this was in just one midsize market that is not at the epicenter of Map spam. These were listings that, during the period of the study, were trusted in Google Maps and were frequently visible in the search results but remained uncounted.

Clearly their methodology grossly undercounts the number of fake listings and how often they are seen by searchers.
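As back-of-the-envelope arithmetic, here is what that undercount could do to the headline number. This is a sketch under loud assumptions: it takes the "900% more" ratio from one market and one vertical and applies it everywhere, and it assumes undetected fake listings are seen exactly as often as suspended ones. Neither assumption is supported by the paper; the function name and parameters are mine.

```python
def adjusted_visibility(measured_share, extra_found_ratio):
    """
    Scale a visibility figure that was measured only on suspended listings.

    extra_found_ratio: undetected fakes per detected fake (9.0 means "900% more").
    Assumes, purely for illustration, that undetected fakes are seen as often
    as the suspended ones were.
    """
    detected_fraction = 1.0 / (1.0 + extra_found_ratio)
    return measured_share / detected_fraction


measured = 0.005  # Google's "fewer than 0.5%" figure
print(f"adjusted visibility: {adjusted_visibility(measured, 9.0):.1%}")
```

If the algo caught only one fake listing in ten, the same 0.5% figure would scale to roughly 5%. Undetected listings also stay live longer than suspended ones, so even this adjustment could be low.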

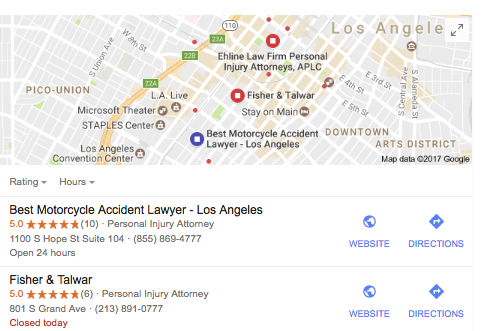

Beyond plumbers etc., we know that lawyers broadly abuse virtual offices as well. Because they are not targeted by the algo, these are completely missing from the analysis[9]. We also know that UPS and drop boxes continue to be abused aggressively, and many of the listings using that method were obviously missed. These unsuspended fake listings in the live index add up.

Using only those listings that were suspended by the algo as the basis for this analysis invalidates the conclusion. The best that could be said from this data is that “fake listings that we allowed into the index and were subsequently suspended were seen in local search results 0.5% of the time”.

Conclusion:

This study offers some interesting data. But the conclusions put forth are not warranted by the research. The visibility number offered by the paper is at best a lower bound on the visibility of fake listings, nothing more.

Who knows what the real number of fake listings in the Maps index is or how often they might be seen?

This research does a good job showing us what segments have been targeted by Google, which markets they were in and some of the techniques they used. It does little to shed any light on the questions of fake listing visibility to the average searcher. Or more importantly to the average searcher in markets where fake listings are prevalent.

We can perhaps give the researchers some slack in this. They did make some attempt to position their summary within the obvious limitations of their data. Although not persuasively in my opinion.

On the other hand, for the Google research blog to proclaim the results with NO qualification is at best cynical and at worst deceptive.

1 – Google Research Blog: Keeping fake listings off Google Maps. Hrrmph! Should have read: Listings we kept off of Google Maps.

2 – Which is more fake and does more consumer harm? A locksmith with a fake address or a chiropractor with 100 fake reviews? It very well could be the latter.

3 – The list of the top ten is filled with the usual suspects: Locksmiths 25.7%; Plumbers, electricians 14.6%; Restaurants, pizza delivery 7.3%; Motels, hotels, bed-and-breakfast 5.4%; Clothing stores, beauty salons 3.8%; Lawyers, consultants, accountants; Limousine, taxi, travel agents 1.9%; Car repair, towing, dealers 1.7%; Photographers, graphic designers 1.5%; Movers, packers, shippers 1.5%. But bail bonds, Internet service providers, real estate agencies, and dating agencies didn’t fail to get mentioned. I am sure that “internet service providers” likely equals SEOs.

4 – The starting dates for Map spam weren’t the only instances of factual errors. It was noted that Google Maps provides 4000 categories from which to choose. The number is actually closer to 3500. Regardless, factual errors, even small ones, reduce the credibility of the paper. And given that four of the paper’s authors work at Google, it is hard to understand how this assertion was left to stand.

5 – Massive spam of both types noted by Google was prevalent AT LEAST six years prior to the study. A simple Google search on the phrase “locksmith spam” quickly shows a result from 2011. Maybe seeing Map spam was new for them but that doesn’t excuse the factual errors.

6 – Brings to mind the phrase, as noted in Wikipedia: “‘Lies, damned lies, and statistics’: a phrase describing the persuasive power of numbers, particularly the use of statistics to bolster weak arguments. It is also sometimes colloquially used to doubt statistics used to prove an opponent’s point.” Although often attributed to Mark Twain, it appears to be from Benjamin Disraeli. Who knew?

7 – Google does track impressions down to the listing level so it is conceivable that the data is available and could be integrated into this study. No effort appears to have been made to do so.

8 – While the lead researcher, Danny Yuxing Huang, is a 5th-year Computer Science Ph.D. student at the University of California, San Diego, four of the other authors work for Google. At least one of the Googlers (Abishek Kumar, Team Lead of the Local Guides) is involved in the local space.

9 – I have often wondered why lawyers are treated so differently than plumbers and locksmiths. Both are violating the guidelines in much the same way and yet plumbers and locksmiths are targeted for removal while lawyers, for the most part, are given a pass and are rarely removed for the very same offense.

© Copyright 2026 - MIKE BLUMENTHAL, ALL RIGHTS RESERVED.

Comments

Good analysis on this. Google fanboys (and fangirls) take note!

Mike, your writing, without fail, gets me pumped up and re-motivated about ethical local SEO. Thank you!

@Kyle

That’s the nicest thing someone has said to me in a while. Thank you! Just because we live surrounded by snakes doesn’t mean we have to be a snake!

Thanks for the alternate world view Mike. I found it interesting that UCSD and Google released it at the same time. It seems like Google is paying for research to present in court cases.

Meanwhile our case against Google moves forward! US District court says Google must defend. http://nationallocksmithissues.com/wp-content/uploads/2017/01/Filed-Copy-First-Amended-Complaint-Baldino-v-Google-January-13-2017.pdf

Brilliant analysis Mike, I also read the Google Research blog post and couldn’t believe how misleading and optimistic their conclusions were.

Google need all the help they can get from users and the search industry to tackle this problem but they’re seriously downplaying the effectiveness of Maps spam. I’m not surprised the number of active and suspended listings used in the study is confidential. They won’t even reveal this number as a fraction.

Let’s face it. Google is struggling to get things done under its own weight. They’ve made a ton of mistakes along the way with the GMB and GBV/SVT programs and they continue to do so due mostly to a lack of coordination amongst their teams. But for them to admit that this is at the core of the issue would be simply bad for business.

The fact is, playing on the edges of open source content submission and setting rules and restrictions to prevent abuse is a bloody tough game. What we also have to take into consideration is…. is there a map system right now that is as well populated with correct data that also has the same market penetration in use? No. All the other maps are relatively just as bad but fall far short in usage and updated content.

I for one would love to see more competition from Apple Maps, Here Maps (Facebook’s choice) or the like and then maybe there’d be more attention to this and less likely to have these false claims due to potential competitors calling them out on it.

In any case, as always, Mike your articles are brilliant. Not sure there are many people that go into the depth you do and it’s much appreciated!

@Marc

Thanks. I agree that Google’s methodology does produce one of the better business lists in the market. I do find though that their mapping is no longer more accurate nor more functional than Apple’s. Here, unfortunately, is still in the weeds.

But better business listings at the expense of a great deal of spam makes their product useless and in fact dangerous to use in certain verticals in certain markets. Their attempt to gloss over that with this research is ill advised.

And their unwillingness to spend money on fixing and preventing abuse is appalling to me. The fact that they have become the de facto utility means that they have obligations beyond just profit.

I find their manipulation of the news in this context unpalatable.

PS I appreciate the value you put in my “work”. Thanks

Personally, I’m very discouraged and depressed about GMB spam. I’ve been reporting local spammers for weeks on Google Maps and my edits are still pending. I’ve tried GMB forums and nothing happened too. I still don’t have an idea how to fight this and I really think we need to push this as a team to Google. Thanks, Nick.

Hello Mike,

Google has been taking lots of actions to fight spam and changing its algorithm accordingly. But I agree with you that Google has not succeeded in fighting spam when it comes to fake local business names.

Businesses are taking advantage by using keyword optimized (Over Optimized) business names. Recently I came across https://moz.com/blog/local-seo-spam-tactics-are-working-how-you-can-fight-back where we can see such type of spam clearly. Google will have to take action against such business listings.

Thanks for the in-depth analysis on the Google research paper.
