Understanding Google My Business & Local Search

Google Photos: A Visual Graph of People, Places and Things. Can It Become Their “Everything Graph”?

Google Photos, positioned by Google as a Gmail for photos, is an incredible product. Incredibly amazing, incredibly scary. It does well what Google does well.

Update: If you are interested in learning more about the technology behind Photos and what it is capable of, read this article: How Google’s New Photos App Can Tell Cats From Dogs.

It provides unlimited storage for all of your photos and then proceeds to organize them for you. For the first time, probably in your life (at least in mine), you actually have a library of photos that has been organized in some meaningful way. All organized in much the same way and with the same connections that you have in the real world…

Let’s leave the very obvious and significant privacy implications aside and the fact that our government is likely in possession of similar technology and look at the way the product is organized and how it very well could influence the future of search.

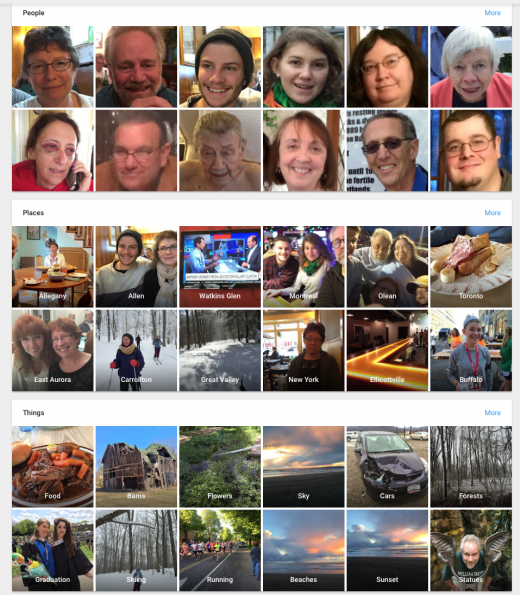

People, Places and Things is the main organizing metaphor for Photos.

Sound familiar? It should as it is the same organizing principle of Google Plus and of the Knowledge Graph, the tech underlying much of Google’s current advances.

Google manages to (mostly) successfully arrange every photo that you have ever taken into the right category… and often at an incredible level of granularity. And I have taken a lot.

People. Google’s ability to recognize people is amazing. They can pick out a person that is in the far distance or on the periphery of a busy scene. Clearly they can find faces and match them to a known set with very little data and from a photo with a lot of noise. Google is able to match the person in different photos despite bad lighting, partial side views, headwear and glasses that are not normally there.

Here is a range of photos from which Google was able to “pick” out my sister successfully, whether she was covered in a medical gown, displaying black eyes, underexposed in the back of a photo or in a crowd:

Places. That’s the relatively easy one. Almost every photo these days comes geotagged, so Google knows where it was taken, at least to within 100 feet or so. They don’t yet auto-assign a specific location, but they show incredible accuracy in auto-assigning the photos at the city level. I assume that Google has more granular insights but has not yet turned them loose for fear of a privacy backlash.

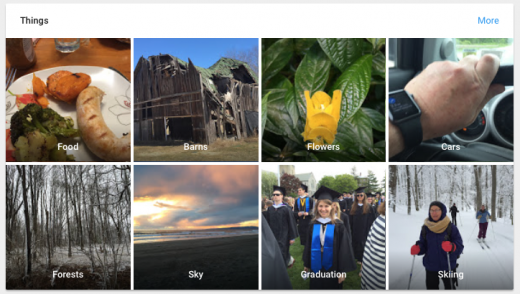

Things. Google is able to characterize a wide range of entities from food to weddings, from ruins to statues. All automatically and all with a fair degree of accuracy.

In each of these categorizations Google displays the top 12 items that fit the category. And in each, the “graph” that results is amazing.

For example, in the top 12 People display, Google shows, in this order:

My wife

Me

My son, daughter and step daughter

My step mother

My sister

My brother in law

My (now deceased) father

A couple that are my best friends

My son in law

And as you dig deeper by looking at “more”, next up you will see my peers and frequent co-presenters from Local U. That is an amazing take on my social graph.

Has Google connected the dots to the level of actually “knowing” who is in my real social circle? I am not sure and they certainly are not saying so.

An article in the Huffington Post, citing Anil Sabharwal, the product lead, had this to say:

Currently, Google Photos doesn’t let you give specific names to people or things, so you’re stuck typing in “cat” even if you’re only looking for your beloved pet Rex. But Sabharwal said that could change in the “next quarter or so.” An individual using Google Photos would manually be able to apply a name to someone who appears in their photographs and search according to that name moving forward.

Similarly, emotional recognition may be on the way. Sabharwal said Google Photos would ideally be able to sort according to any number of keywords that one might use to describe a photo — like facial expressions.

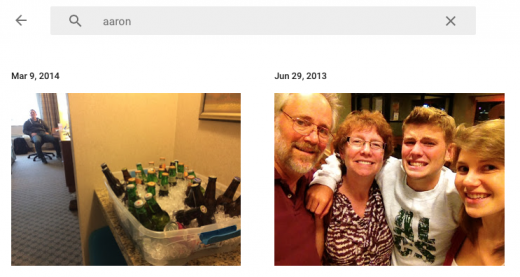

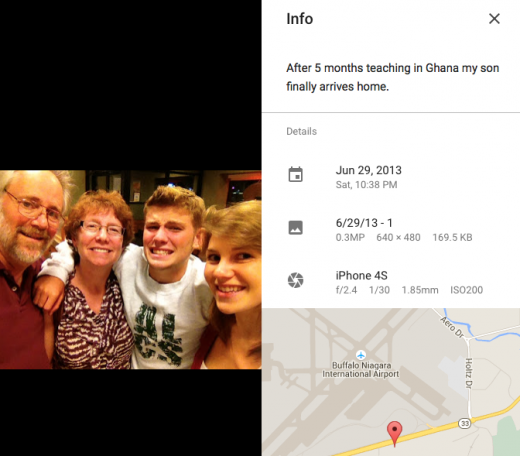

They have not yet started to comprehensively match names to faces, at least not publicly, but there are indications within Photos that they are able to do so, and some of that capability is already in the product. For example, I searched on the word “Aaron” and up came photos with my son AND Aaron Weiche. In the case of Aaron Weiche, I had noted his name in a G+ post, so we know that Google is looking there for information. But in the case of my son, I am not really sure where they picked it up.

Obviously, once folks start “tagging” images there will be a trove of cross-account information, allowing easy identification of who is in a given photo and who else they frequently appear with. But even without that there are plenty of signals across the web, in file names, captions and web sites, for Google to start making reasonable associations to inform the social graph.

As to Places and Things, Google obviously is able to ascertain both what and where. With the current stated granularity, Google is able to accurately identify a very broad range of “things” in terms of both objects and activities. One assumes that they will be able to identify very specifically not just a flower but the kind of flower. Or not just that it is food, but what the dish is. If they can identify a “black cat”, won’t they also be able to ascertain the difference between sausage and pasta? And, when matched against a rich snippet menu, make an intelligent guess about the dish name?

And while the “where” might be limited to the 100′ accuracy in the built in geo data in the photo itself, with the addition of both wifi and subject context, Google should be able to narrow down location in the photo to a given business quite easily.
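To make the 100-foot point concrete, here is a minimal sketch in Python of matching a photo's geotag against nearby business listings. The business names, coordinates and function names are hypothetical, and this is only an illustration of the idea, not a description of how Google actually does it. It uses the standard haversine formula for distance.

```python
import math

FEET_PER_METER = 3.28084
EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def distance_feet(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in feet (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a)) * FEET_PER_METER


def candidate_businesses(photo_lat, photo_lon, businesses, radius_feet=100):
    """Return (name, distance_in_feet) pairs within radius_feet, nearest first."""
    hits = []
    for name, lat, lon in businesses:
        d = distance_feet(photo_lat, photo_lon, lat, lon)
        if d <= radius_feet:
            hits.append((name, d))
    return sorted(hits, key=lambda hit: hit[1])


# Hypothetical listings: (name, latitude, longitude)
businesses = [
    ("Cafe A", 42.0000, -78.0000),
    ("Diner B", 42.0002, -78.0000),
    ("Bakery C", 42.0100, -78.0000),
]

# A photo geotagged a few feet from Cafe A
matches = candidate_businesses(42.00001, -78.0000, businesses)
for name, feet in matches:
    print(f"{name}: {feet:.0f} ft")
```

In this made-up example both Cafe A and Diner B fall inside the 100-foot radius, which is exactly why the geotag alone is not enough and why extra context such as wifi or the subject of the photo would be needed to settle on a single business.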

And by comparing photos across accounts they will be able to ascertain the popularity of given businesses and, at some point, what the most popular foods are.

Google just might be able to surmise not just where I live and where I travel but where and what I eat, who I am friends with and what my interests are. They will be able to (if they haven’t already) create a composite “graph” of me that just might provide more insight than the very limited, professional view they get of me on G+, Facebook or Twitter. Or even from my search history.

With this amount of local context they could also make reasonable judgements about the types of places I might like to eat when I travel, the type of recreation I partake in and how I spend my leisure time.

Even without the other signals I provide Google, the amount of information about me in Photos is a gold mine, and it appears to me to have put in place a foundation for a personal “everything graph”.

When tied together with my reviews, my searches, my social sharing and the location of my phone, it could provide the sorts of details that will make search in general, and local search in particular, powerful.

My sense is that the predictive search capabilities of Google Now are just getting started in local.

Update: “I’ve grown to depend on the Google Now service as a means of managing my travel itineraries because it’s one step ahead of me having to ask,” Horowitz says. “In the same way, if I thought we could return immense value to the users based on this data, I’m sure we would consider doing that.” From: How Google’s New Photos App Can Tell Cats From Dogs.

© Copyright 2025 - MIKE BLUMENTHAL, ALL RIGHTS RESERVED.

Comments

6 Comments

I do like the new interface. I’ve NOT been a fan of the Google+ photos interface, and in fact, I always click the link to go back to the Picasaweb interface when sharing albums.

The artificial intelligence bit is working well with spotting people, but it seems to be struggling a bit with things. It’s created a “Cars” folder which is about 50% boats, with some planes thrown in. Oh, and yes, some cars too. Places are being picked up on well, I suspect simply because I’ve named the albums according to easy-to-deduce place names (Paris, Carcassonne, Otter Rock, etc.). The majority of my photos aren’t geo-tagged (they’re from my Nikon D5200, for which I have a geotagging attachment but don’t use it much).

@Michael

I would estimate that Google got my places nearly 100% right (most of my images are geo-coded) and my “things” were in the 90-95% accurate range. There were some errors and no obvious way to train the machine. Regardless, the associations that can be drawn are incredible. The software is useful too.

Using Google Photos desktop, is there a way to edit the places, people, or things tags (collections or whatever they’re called)?

For me, tagging is something that needs to be 100%. If it’s any less, and the chance is there that I can’t find the one photo I’m looking for, then it’s just no good.

I’m okay with Google automating everything, but only if they include ways for the user to make corrections.

@Troy

At this point I see no way to affect what things, people or places photos are assigned to. It makes sense that they too would want that feature but it didn’t make it into this version although it is very obviously needed.

Going to have a look – thanks Mike

I wish they would allow us to add “tags” – preferably with the ability to circle the “thing” – which could be added to the database the algorithms depend on. While fairly accurate, it’s far from perfect; hence I find turtles in my birds and snakes in my lizards (which is okay – for now). They should look at it and think of it as a type of crowdsourcing which is THE only way to go with megadata today – and they’ve got the crowds eager and ready to do it if only to organize our collections better ourselves.

Take a hint, Google, and do yourself a favor: tap into the free masses of photos and manpower you have in your user base to make your programs smarter by letting us put more intelligence (or at least distinguishing factors) in it.
